STEELTOPIA, your reliable business partner

The history of steel - 2

- Writer: STEELTOPIA
- Date: 23-08-31

Secondary Steelmaking

After World War II, there was a need to improve the properties of steel. A major advance was the continued refinement of steel in the ladle after tapping, known as secondary refining. The first developments, between 1950 and 1960, involved stirring the liquid metal in the ladle with argon gas. This reduced variations in the temperature and composition of the metal, allowed solid oxide inclusions to rise to the surface and be absorbed into the slag, and removed dissolved gases such as hydrogen, oxygen, and nitrogen. Gas stirring alone, however, could not lower hydrogen to acceptable levels when producing large ingots.

In the mid-1950s, once large vacuum pumps became commercially available, it became possible to place the ladle in a large evacuated chamber and, with argon stirring, reduce hydrogen to below 2 ppm. Improved degassing systems of this kind were developed in Germany between 1955 and 1965.

The earliest ladle addition method was the Perrin process, developed in 1933 to remove sulfur. Steel was poured into a ladle containing a liquid reducing slag, and the resulting vigorous mixing transferred sulfur from the metal to the slag. The process, however, was costly and inefficient. In the post-war period, a method was established in Japan in which an inert gas carried desulfurizing agents such as calcium, silicon, and magnesium through a lance into the liquid steel in the ladle. This method was used mainly to produce steel for gas and oil pipelines.

Alloy Steel

Alloying elements are added to steel to improve specific properties such as strength, wear resistance, and corrosion resistance. Although a theory of alloying has been developed, much of the alloy steel in commercial use was developed empirically, sometimes guided by intuition. The earliest experimental research on alloy additions to steel dates back to 1820, when the Britons James Stodart and Michael Faraday added gold and silver to steel in an attempt to improve its corrosion resistance. Although these alloys were not commercially viable, they paved the way for adding chromium to steel to improve corrosion resistance (see Stainless Steel below).

Hardening and Strengthening

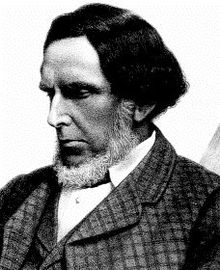

(Robert F. Mushet)

The first commercial alloy steel is generally traced back to 1868, when the Briton Robert F. Mushet discovered that adding tungsten to steel greatly increased its hardness, even after air cooling. This material became the basis for the tool steels used in metalworking.

Around 1865, Mushet also found that adding manganese to Bessemer steel made it possible to cast ingots free of blowholes. He was aware that manganese also mitigated the brittleness caused by sulfur, but it was Robert Hadfield who, in 1882, developed a steel containing 12-14% manganese and 1% carbon with greatly improved wear resistance.

The real driving force behind alloy steel development was military application. Around 1889, a steel containing 0.3% carbon and 4% nickel was produced. Shortly afterward, the addition of chromium further improved it, and it came into wide use for battleship armor plate. In 1918, it was found that adding molybdenum to this steel improved its ductility.

A general understanding of how alloying elements affect the depth of hardening (hardenability) emerged from research conducted mainly in the United States during the 1930s. An understanding of why properties change during tempering came from studies using transmission electron microscopy between 1955 and 1965.

Microalloyed steel

An important development immediately after World War II was the improvement of steel compositions for easily weldable plates and sections. It was driven by the spread of welding, which was faster than riveting, in mass production, and by the fractures suffered by the welded plates of the mass-produced Liberty ships during the war. The improvement was achieved by raising the manganese content to 1.5% while keeping the carbon content below 0.25%.

The group of steels known as high-strength low-alloy (HSLA) steels aimed to improve the general properties of structural steel without significantly increasing its cost, which they achieved through small additions of alloying elements. By 1962, the term "microalloyed steel" was being used for structural steels containing 0.01-0.05% niobium. Similar steels containing vanadium were also produced.

The period from 1960 to 1980 saw major advances in microalloyed steel. By combining temperature control during rolling with microalloying, producers raised yield strength to nearly twice that of conventional structural steel.

Stainless Steel

Attempts to improve the corrosion resistance of steel with alloying additions are not surprising. What is surprising is that a commercially successful material was not produced until 1914. This material, containing 0.4% carbon and 13% chromium, was developed by Harry Brearley of Sheffield for making cutlery.

Chromium was identified as a chemical element in 1798 and was extracted in the form of iron-chromium-carbon alloys. This material was first used in alloying experiments by Stodart and Faraday in 1820, and in 1872 John Woods and John Clark used it to make an alloy containing 30-35% chromium. Although its improved corrosion resistance was noted, the steel was never actually produced. Success became possible only after Hans Goldschmidt in Germany discovered a method of producing low-carbon ferrochrome in 1895.

The relationship between the chromium content of steel and its corrosion resistance was established in Germany by Philip Monnartz in 1911. During the wartime period, it became clear that at least 8% chromium had to be dissolved in the iron matrix, rather than combined with carbon as carbides, and that on exposure to air a protective layer of chromium oxide then forms on the steel surface. In Brearley's steel, 3.5% of the chromium was bound to carbon, leaving about 9.5% in solution, enough to impart corrosion resistance.

The addition of nickel to stainless steel was patented in Germany in 1912, but the material was not developed until 1925, as a steel containing 18% chromium, 8% nickel, and 0.2% carbon. It came into use in the chemical industry from 1929 and became known as the 18/8 austenitic grade.

By the late 1930s, the usefulness of austenitic stainless steel in high-temperature applications had become widely recognized, and modified compositions were used in early jet aircraft engines during World War II. The basic compositions of that period are still used for high-temperature service. Duplex stainless steel was developed in the 1950s to meet the chemical industry's demand for high strength combined with resistance to general corrosion and pitting. Its microstructure is roughly half ferrite and half austenite, with a composition of 25% chromium, 5% nickel, 3% copper, and 2% molybdenum.

Forming and Casting

Early metalworkers, such as blacksmiths, used hand tools to shape iron into finished forms: essentially tongs to hold the metal on the anvil and a hammer to shape it. Converting iron blooms into wrought-iron bars required extensive hammering. Although water-powered hammers were in use in Germany by the 15th century, heavy hammers capable of handling 100 kg blooms did not appear until the 18th century. Slitting mills for cutting iron into thin strips and rolling mills for rolling bars into sheet were introduced around the same time. Grooved rolls for producing iron bars were patented by John Purnell in 1766 and were driven by a 35-horsepower waterwheel.

Steel forming operations remained relatively small in scale until the Bessemer process was introduced. Liquid steel was then poured into large iron ingot molds about 700 mm square and 1.5 to 2 meters long; such ingots weighed about 7 tons. After solidification, the ingots were stripped from the molds, reheated, and hot-rolled in a primary (blooming) mill to reduce their size, producing billets about 100 mm square. These billets, 3 to 4 meters long, were then cut to length and served as the starting material for rolling bars, beams, rods, and strip.
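As a rough plausibility check on the ingot figures above, the mass of an ingot can be estimated from its volume. The sketch below is only an illustration: it treats the ingot as a simple square prism (real ingots were tapered and had hot tops) and assumes a typical steel density of 7,850 kg/m³; the function name `ingot_mass_tonnes` is hypothetical.

```python
# Hypothetical sketch: estimate ingot mass from its dimensions.
# Assumes a plain square prism (ignores mold taper and hot top) and a
# typical carbon-steel density of about 7,850 kg/m^3.
STEEL_DENSITY_KG_PER_M3 = 7850.0  # assumed typical value


def ingot_mass_tonnes(side_m: float, length_m: float,
                      density: float = STEEL_DENSITY_KG_PER_M3) -> float:
    """Return the mass in metric tonnes of a side x side x length prism."""
    volume_m3 = side_m * side_m * length_m
    return volume_m3 * density / 1000.0  # kg -> tonnes


if __name__ == "__main__":
    # 700 mm square section, 1.5 m and 2.0 m long
    for length in (1.5, 2.0):
        print(f"{length} m long: {ingot_mass_tonnes(0.7, length):.1f} t")
```

Under these assumptions the estimate is roughly 5.8 t for a 1.5 m ingot and 7.7 t for a 2 m ingot, which brackets the figure of about 7 tons quoted in the text.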

This method of billet production continued until the 1960s, when the development of continuous casting brought significant change. Because liquid steel was transferred directly from the furnace to the casting machine, there was no longer any need to cast large ingots or to reheat them, which consumed a great deal of energy, and the expensive blooming mills used to reduce ingots to a rollable size were no longer required. Continuous casting was first used for non-ferrous metals in the 1930s, and in the 1950s it was tried by steel producers in the United Kingdom, the United States, and the Soviet Union, notably United States Steel Corporation. By 1965, 2% of the world's total steel production was continuously cast. This share rose to 5% in 1970 and to 64% of all steel produced worldwide by 1990 (over 90% in Japan).

Continuous casting contributed in part to the rise, after 1970, of a new type of steel plant known as the "mini-mill." Mini-mills melt scrap in electric furnaces, continuously cast the steel into small-section billets, and then roll the billets into bars or draw them into wire. They were established in industrial regions where scrap was readily available, whereas traditional steel plants had been located near sources of iron ore and low-cost energy.

The introduction of the crucible process made it possible to produce steel castings for the first time. Steel products were cast in Germany and Switzerland from 1824, and in 1855 steel gear wheels were cast in Sheffield. In the United States, steel casting began in Pittsburgh in 1871.

The crucible process remained the main melting method for steel castings until 1893, when the side-blown Bessemer-type converter known as the Tropenas converter was developed in Sheffield. Electric melting in acid-lined furnaces was pioneered in Switzerland in 1907, and today most steel castings are produced from electric furnaces.

Research on foundry practice, which strongly affects the quality of cast products, began in the United States in 1919, and international standards for foundry materials were published between 1924 and 1928. In the 1920s, X-ray methods for evaluating the soundness of steel castings were introduced in the Soviet Union, followed in the 1930s by magnetic particle testing.

Tube

Demand for tubes for gas distribution grew in the early 19th century with the development of the gas industry. In 1824, a method of butt-welding heated, curved strips under pressure was developed in the United Kingdom, and in 1832 tube factories were established in the United States. Similar butt-welding processes are still in use. Improvements to butt-welding with heat and pressure led to the development of electric resistance welding of seams in the United States around 1921. Most welded tubes, including large-diameter tubes formed by spiral-welding continuous strip, are still produced by this method.

Seamless tubing, which has no welded joint, is made by piercing round billets. This process was developed in the United Kingdom in 1841. In 1886, Mannesmann in Germany greatly improved it with rotary piercing, in which a heated billet is rotated between inclined rolls and forced over a piercing mandrel. This method is widely used for both ferrous and non-ferrous metals.

Forging

In the late 19th century, as ingots grew larger, large forging hammers that mimicked the hammering action of early blacksmiths were developed. For very massive parts, the first hydraulic forging press was built in the United Kingdom in 1861 and introduced to the United States in 1877. In press forging, a hydraulic piston drives the upper die against the workpiece, which rests on the lower die (anvil).

Plate and Sheet

Plates are produced by hot rolling, a technology developed in the early 19th century. To make thinner sheet, the steel is then cold-rolled in a mill whose roll stands are arranged in series, so that its thickness is reduced progressively in a single pass through the mill. The first plants of this type were established in the United States in 1904.

When wide, thin sheet is rolled, the small-diameter work rolls needed for thin material tend to bend in use, producing sheet that is thicker at the center than at the edges. This problem was overcome after World War II by the introduction of larger backup rolls. In extreme cases, a cluster mill is used, in which the small work rolls are supported by nine larger-diameter backup rolls.

The information provided on this webpage is intended solely for informational purposes. Steeltopia makes no express or implied representations or warranties regarding the accuracy, completeness, or validity of this information.
